Google I/O 2024, the company’s annual conference where it showcases its latest innovations, technologies, and outlook for the future, has just started and, as expected, it’s all about AI, and Gemini in particular. Most of the keynote covered Gemini being integrated into services like Meet, Gmail, Photos, and of course Search, and how it can take care of the nitty-gritty of, say, planning a trip, summarizing your emails and video meetings, or finding specific information without having to scour through tons of photos.

Here’s what we think are the best bits of this year’s Google I/O.

Gemini 1.5 Pro

Gemini 1.5 Pro offers what Google says is the longest context window of any widely available consumer chatbot (1 million tokens), and it can process extensive data of multiple types: text, images, documents, spreadsheets, audio, and even video.

Ask Photos with Gemini

With Gemini integrated into Photos, you no longer need to search through tons of photos just to find, say, your license plate number. All you have to do is ask. You can even run more complex searches that involve historical information, and Gemini will go through your photos to find useful information and generate a summary. This will be rolled out at a future date with even more capabilities.

Gmail and Meet

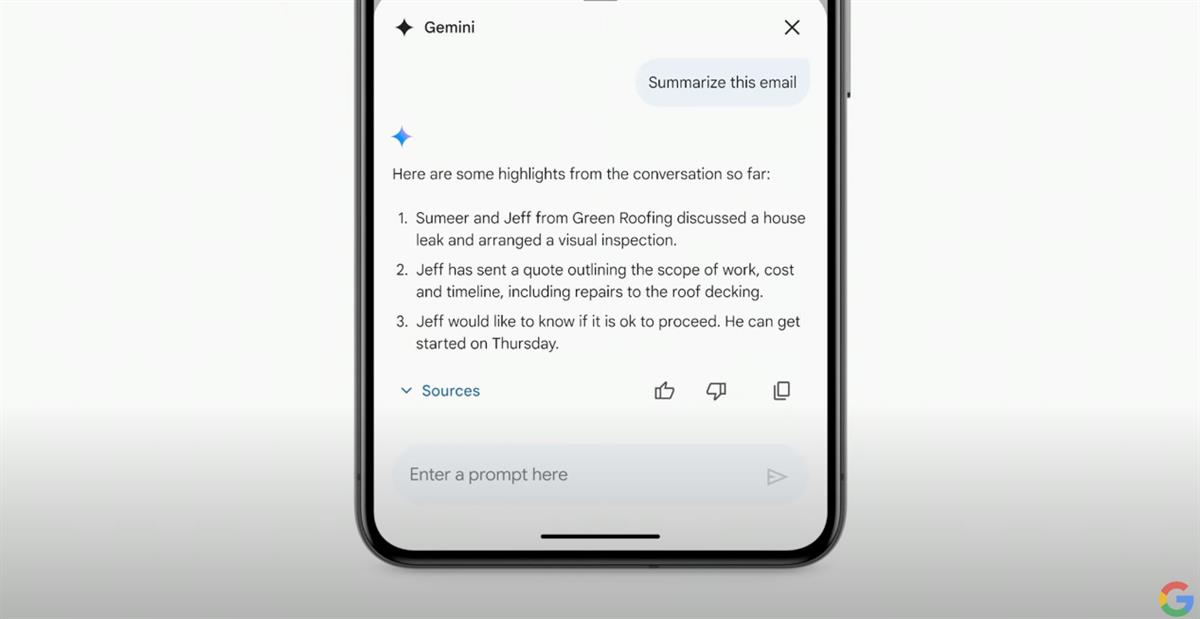

Say there’s been an exchange of emails that you haven’t been able to keep track of. Gemini can generate a summary of what has happened across multiple emails. (This can also be done via the Gmail app.)

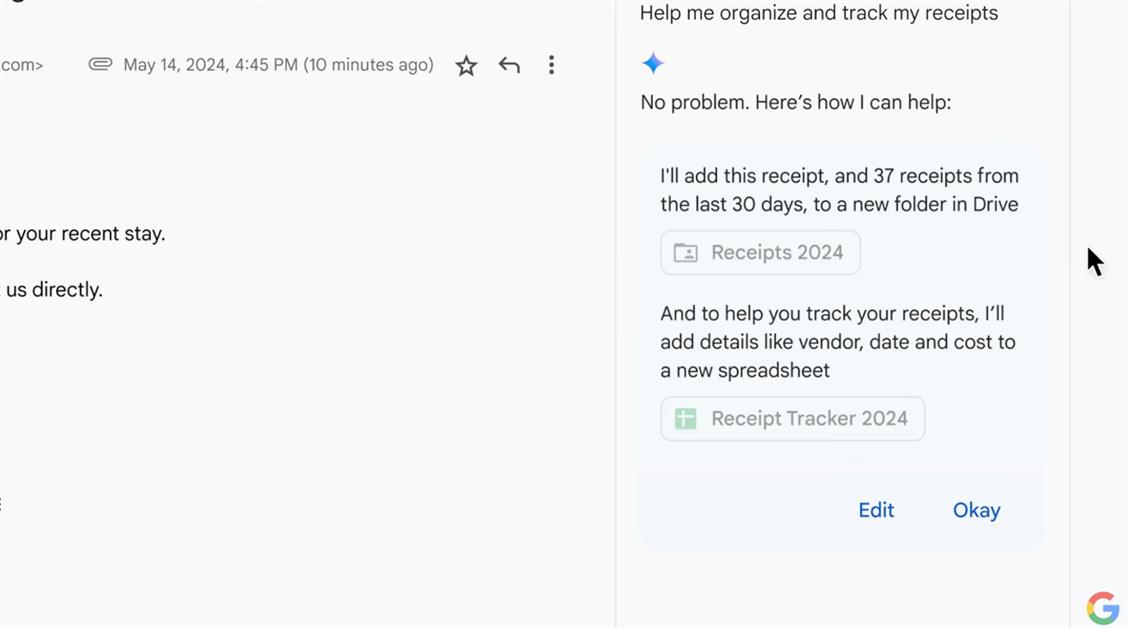

You can also ask Gemini to organize a bunch of documents, say receipts, and create a spreadsheet for tracking.

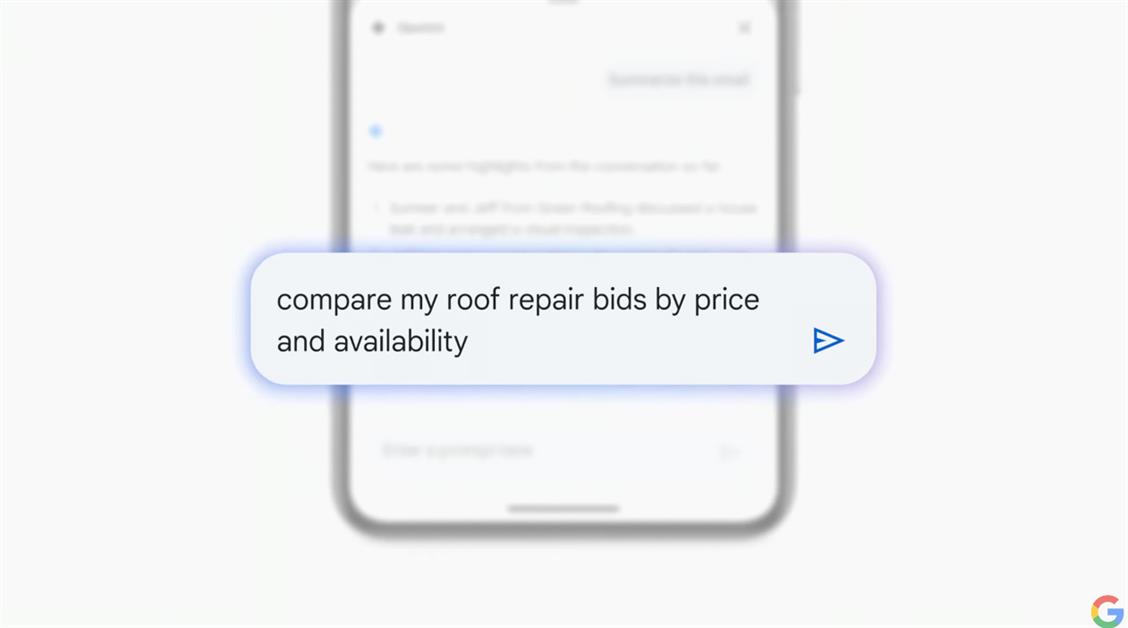

Using the Gmail app, you can even give additional instructions, such as comparing information across emails, without having to go back and open each one individually. When you do reply, you can choose from suggested smart replies.

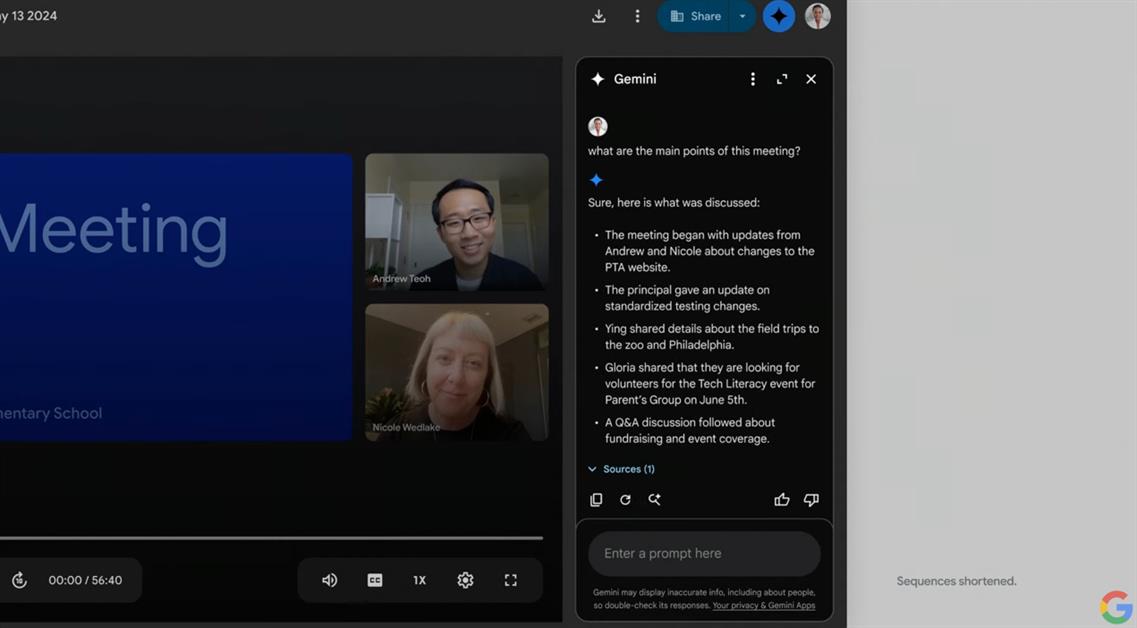

For meetings held on Google Meet, you can ask Gemini to give you the highlights, saving you from having to go through every minute of the recording just to catch up.

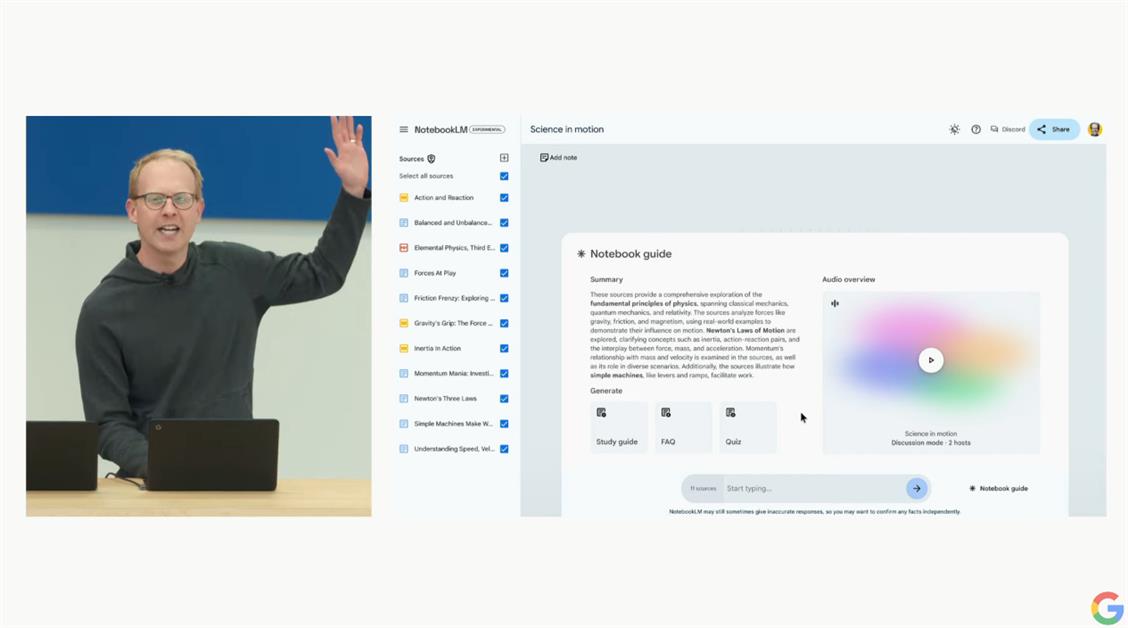

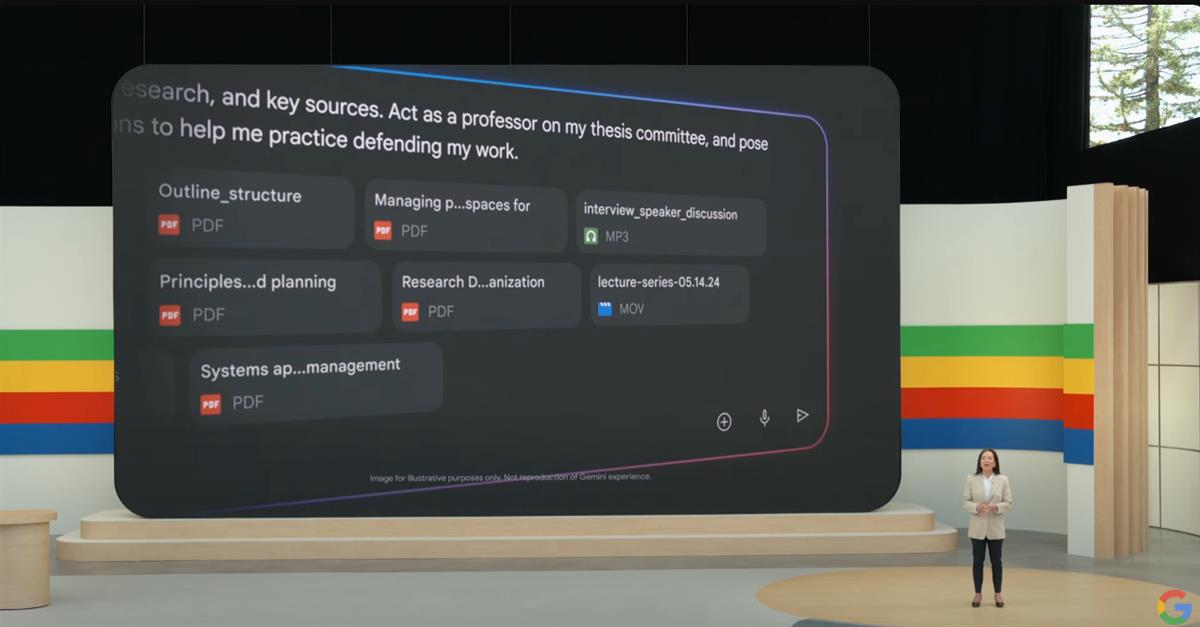

NotebookLM + Gemini 1.5 Pro

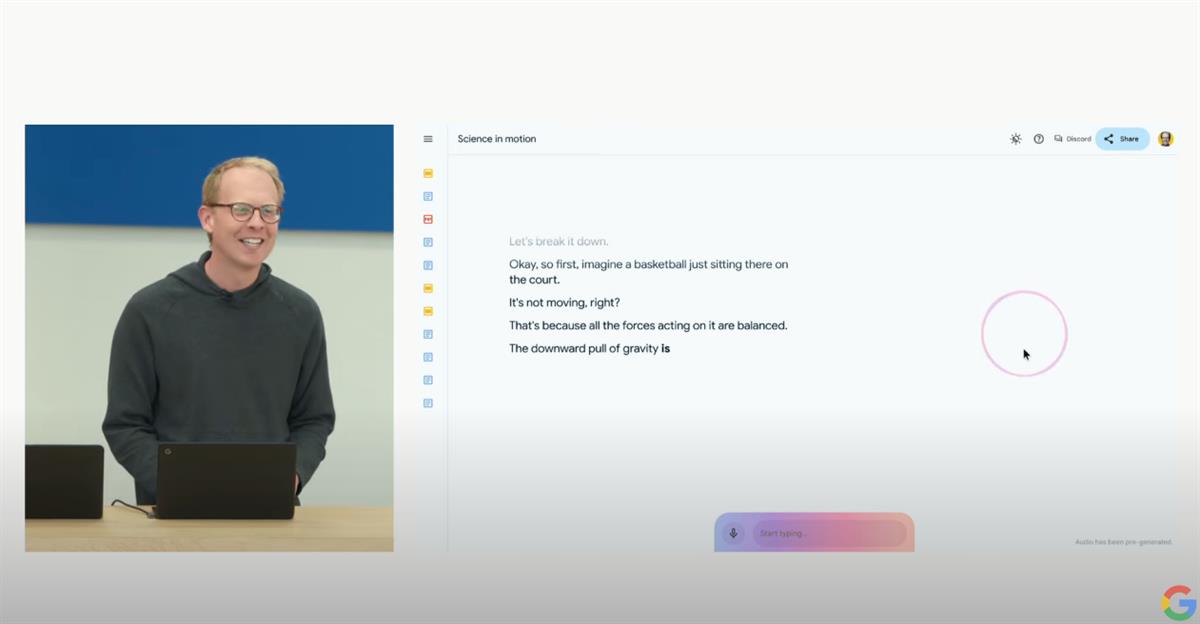

With Gemini 1.5 Pro and NotebookLM, it’s now possible to pool multiple types of resources (e.g., documents, spreadsheets, PDF files, and more) to generate a Notebook Guide, which contains learning sections such as FAQs, a Study Guide, and even quizzes. It can also generate audio material based on what’s uploaded, for a more interactive learning session.

What’s even better is that you can intervene and personalize the material. For example, you can ask it to discuss a topic from the perspective of a game, a sport, or whatever you want, to make things easier for the learner to understand.

Gemini 1.5 Flash and Project Astra

Gemini 1.5 Flash is a lighter version of Gemini 1.5 Pro, designed to be faster and more cost-effective while still offering multimodal reasoning. It works best for use cases that require low latency and high efficiency.

Project Astra, Google’s vision of the future AI assistant, involves building a universal AI agent that is highly adaptive: something that can remember what it sees, is proactive, can learn, and can respond at a moment’s notice even with continuously changing inputs. More importantly, it should be something that can also converse. If the demo is any hint of what’s coming, then we’re all for it.

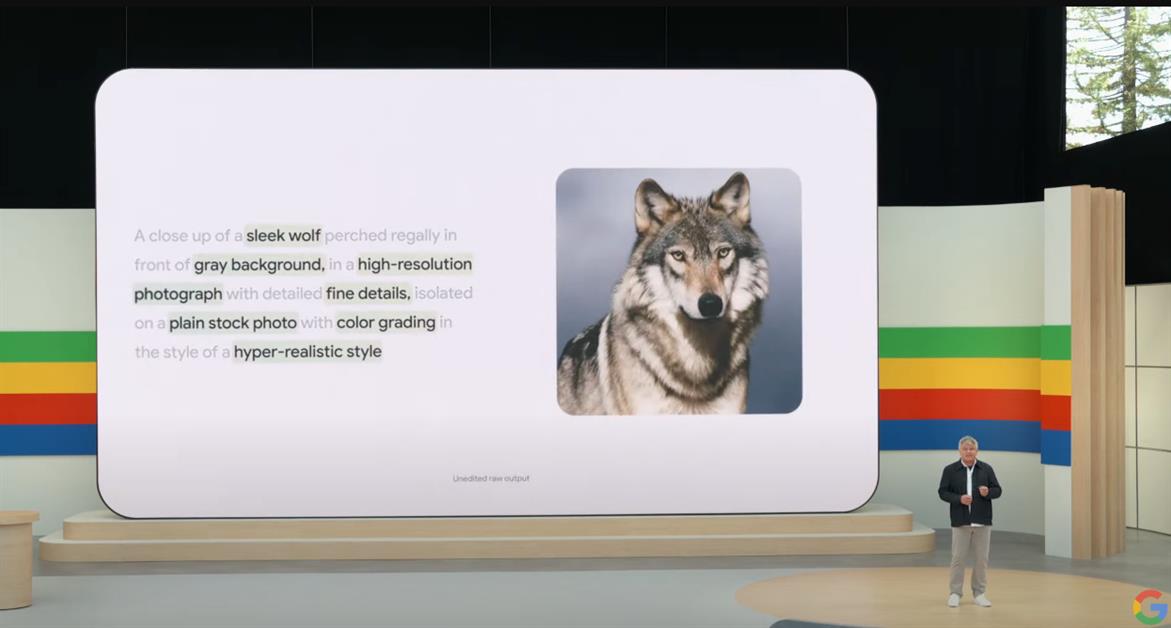

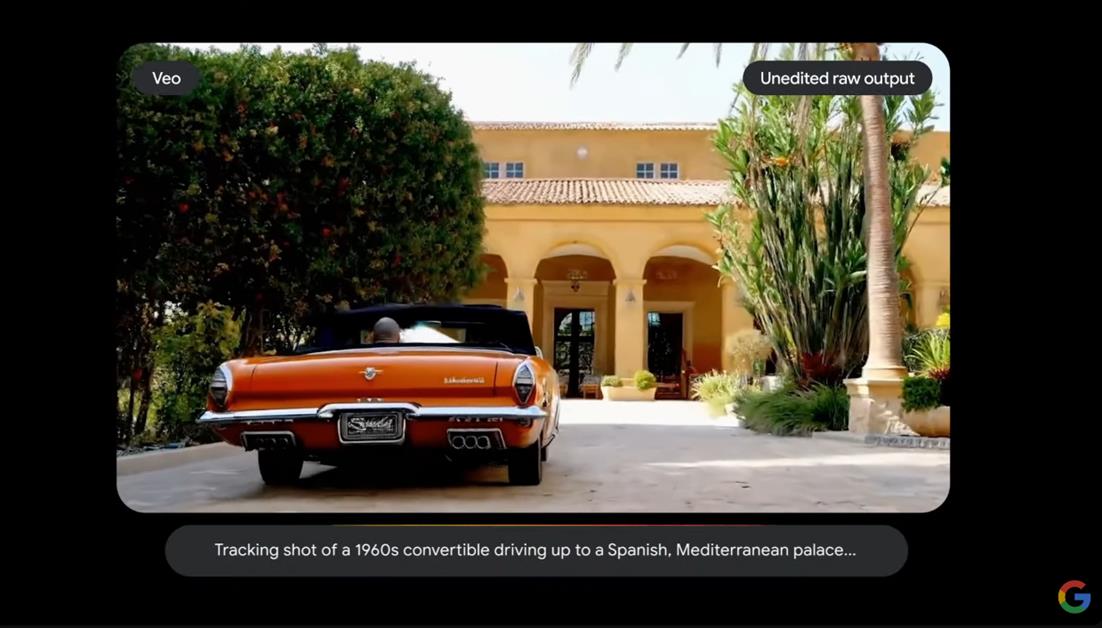

Imagen 3 and Veo

Google introduced Imagen 3, its most capable image generation model, allowing for more photorealistic output, improved details, and fewer visual artifacts, while also being better at understanding prompts. The more detailed your prompt, the better.

Veo, on the other hand, is Google’s most capable video generation model, allowing for 1080p video output from text, image, and video prompts. To try these out, you may join the waitlist at LABS.GOOGLE.

Google Search

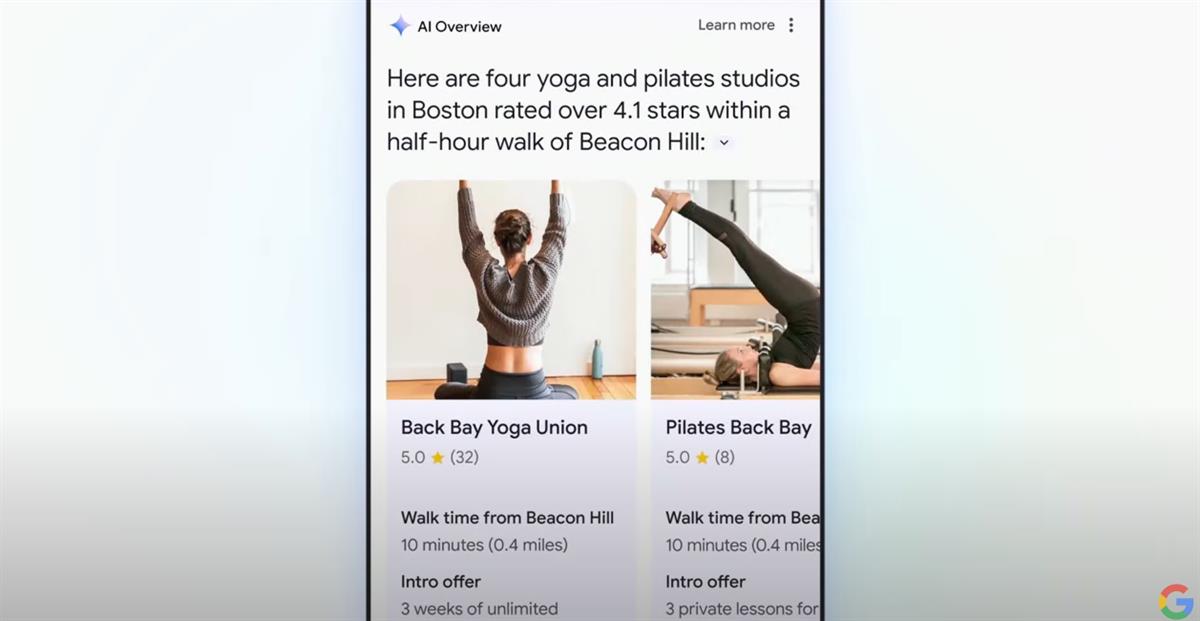

AI Overviews

AI Overviews gives your questions the answers they need and so much more, including links to resources and ideas from various perspectives. Thanks to multi-step reasoning, you can even ask multiple questions all at once. Google then fetches information from various sources like the web, Maps, and more, and puts everything together in a well-organized summary. This feature is initially rolling out to users in the US.

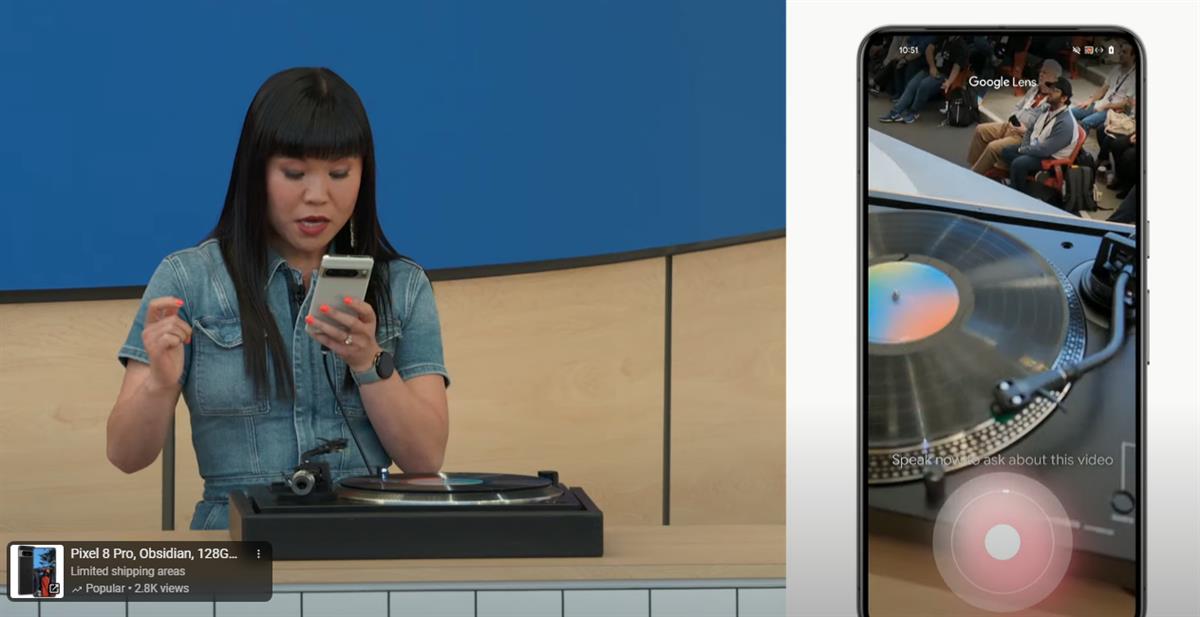

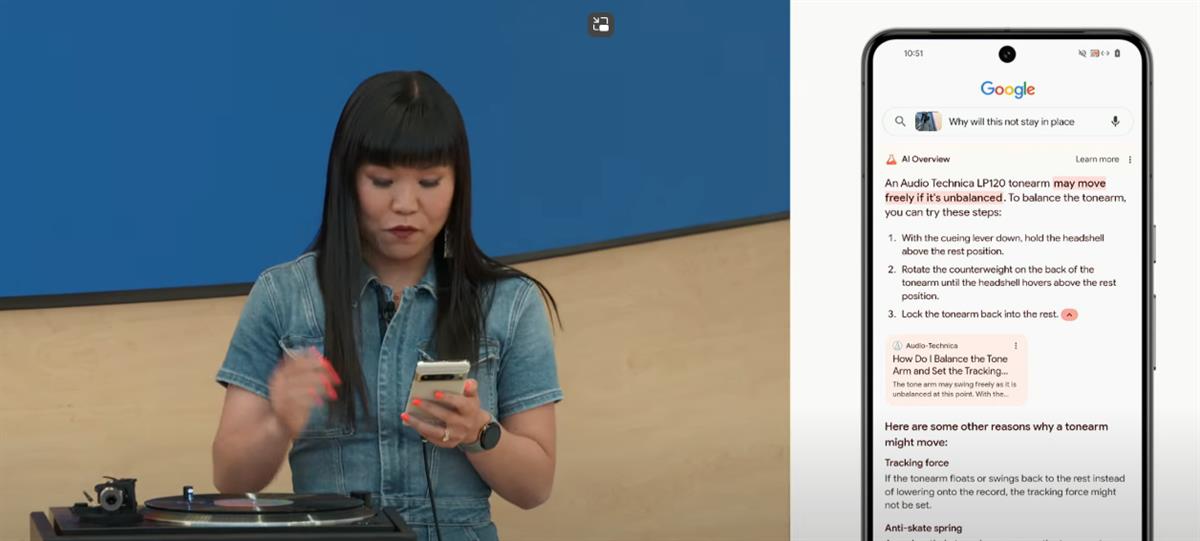

Ask with video

Soon, you’ll be able to ask questions via video on Google Search. The demo showed a woman asking how to fix a problem with a turntable by shooting a video via Google Lens. An AI Overview was then generated with the exact model name of the device and the steps that she could try, along with relevant links.

Gemini App

Gemini Live

Thanks to Google’s latest speech models, you’ll soon be able to talk to Gemini using your voice. The nice thing about it is that you can freely interrupt even while the AI is responding, and it will adapt accordingly. With Project Astra, Gemini will also be able to see what you see and respond to what’s happening.

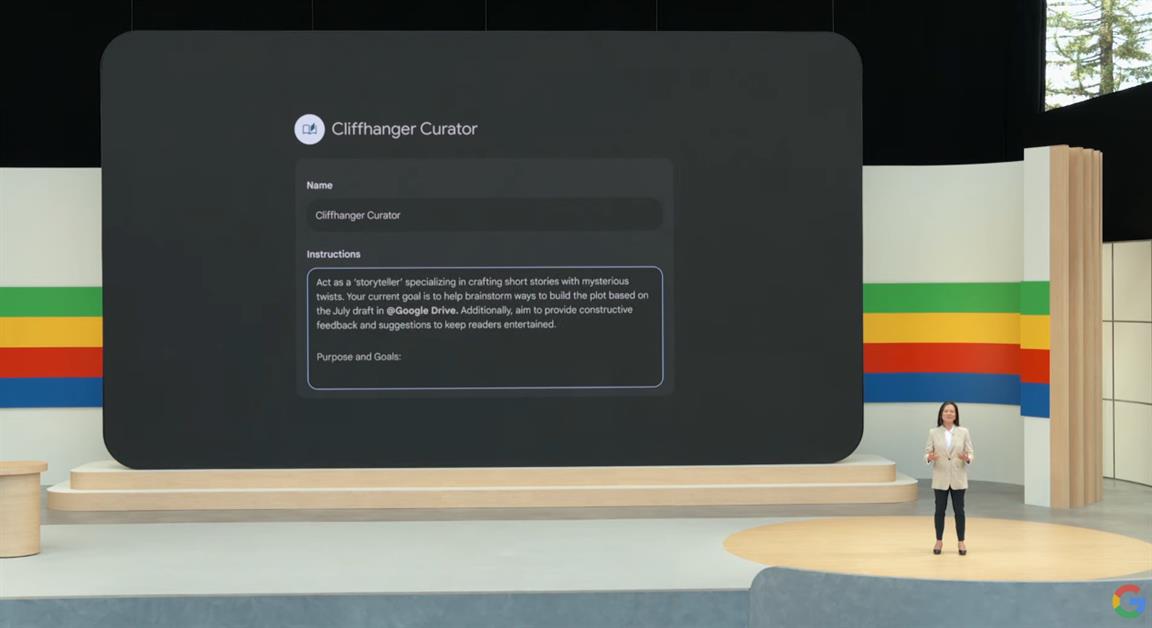

Gems

Gems are like bookmarks, except that they hold prompts. You can reuse them as often as you please without having to enter, say, a long prompt each time.

Example: Vacation Planning

With complex prompts, Gemini gathers information from multiple sources like Gmail and Maps, and takes into account whatever constraints you put in to give you an actual itinerary. You can even make adjustments to, say, the time, and Gemini will intelligently adjust the schedule of your activities to match.

Example: Analysis

You can upload multiple documents and have Gemini analyze them to generate suggestions and more.

Android with AI at the Core

AI-Powered Search

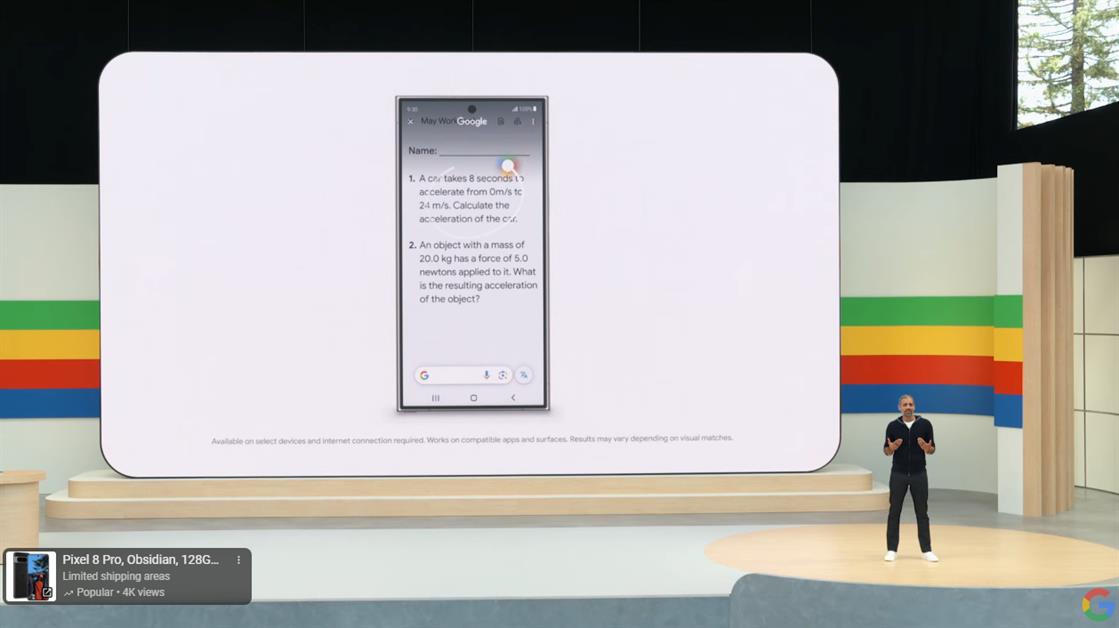

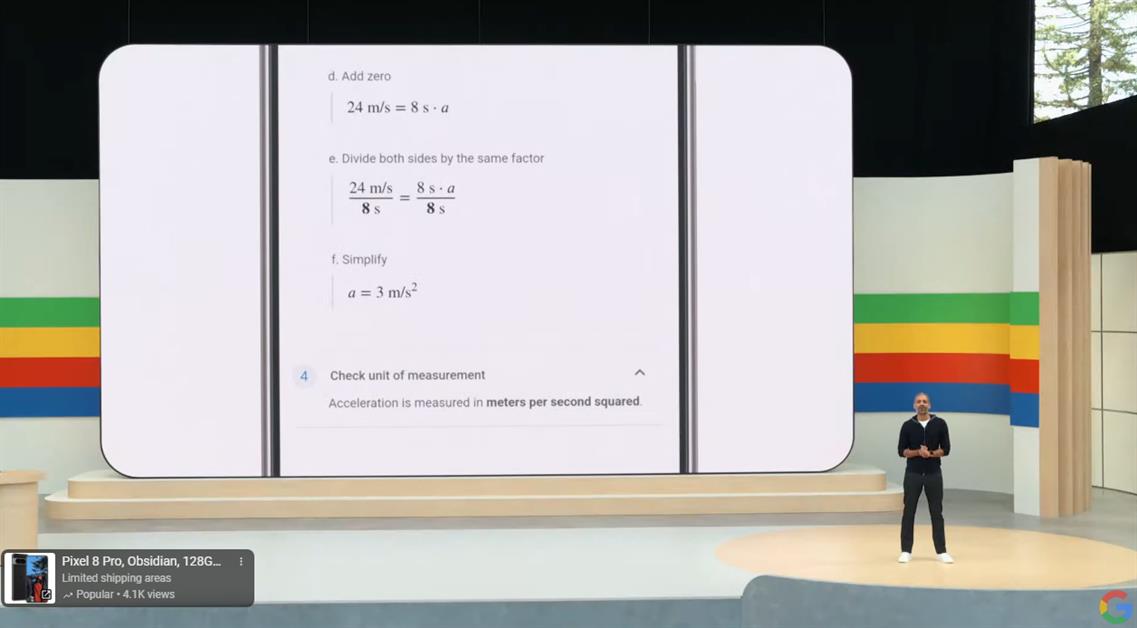

Introduced earlier this year at Samsung Unpacked, Circle to Search offers a faster, more intuitive way to look up information, translate text, and more.

Now, Circle to Search can be used on questions, such as those that require a mathematical solution. What is even more exciting is that later this year, it will also be able to handle more complex problems, such as those that make use of diagrams.

According to Google, Circle to Search is already available on more than 100 million devices and is expected to become available to 200 million devices by the end of 2024.

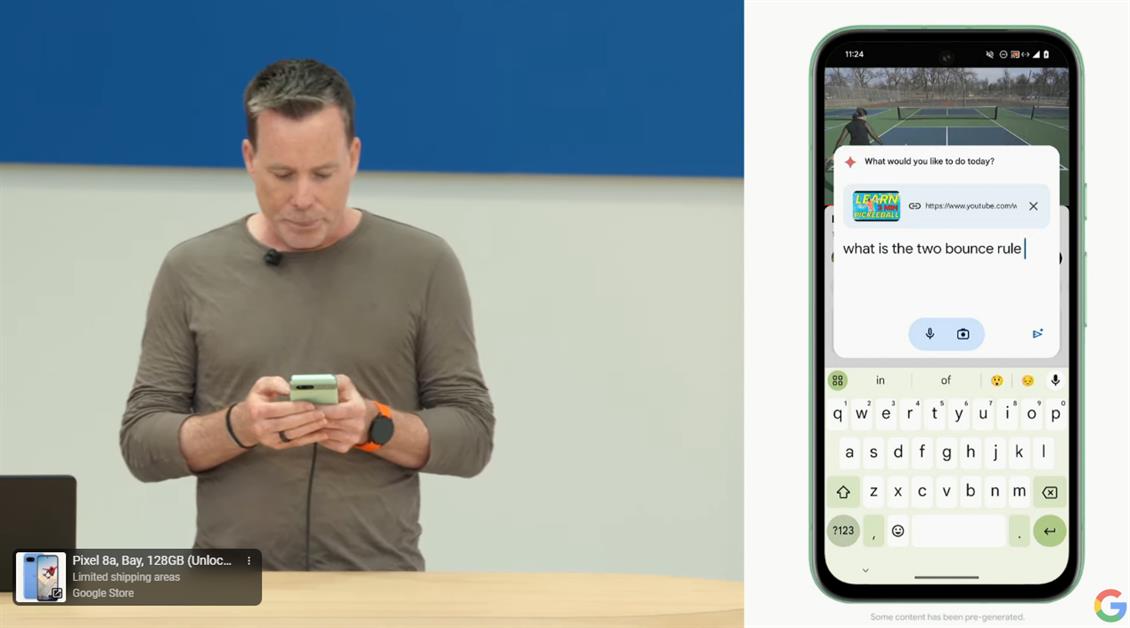

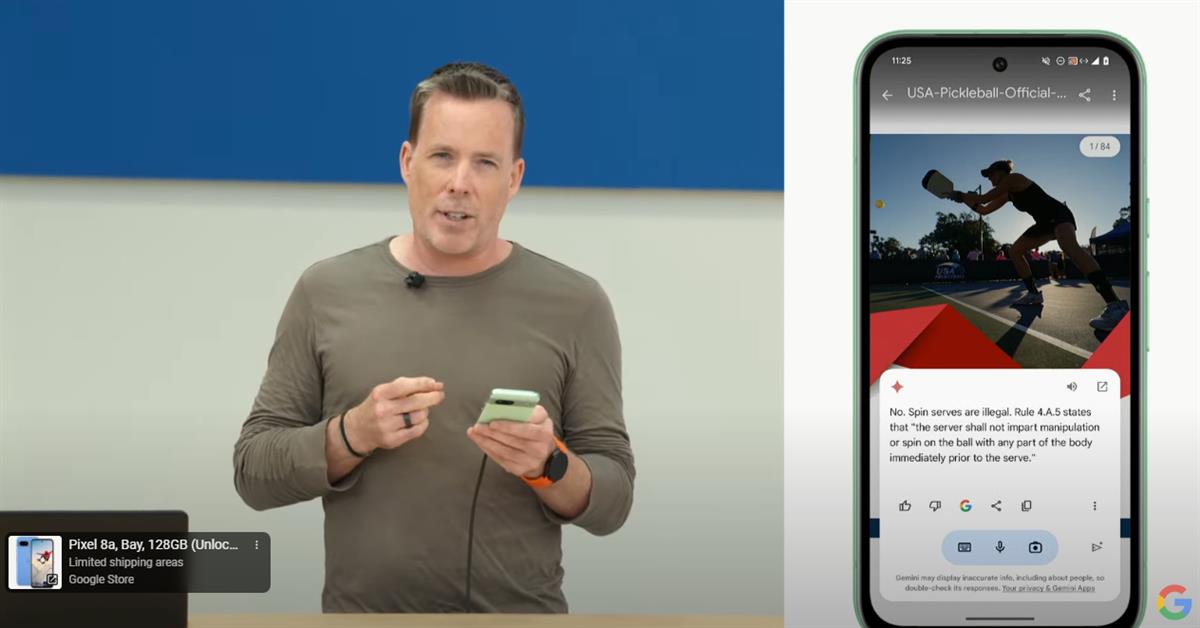

Gemini as Your AI Assistant

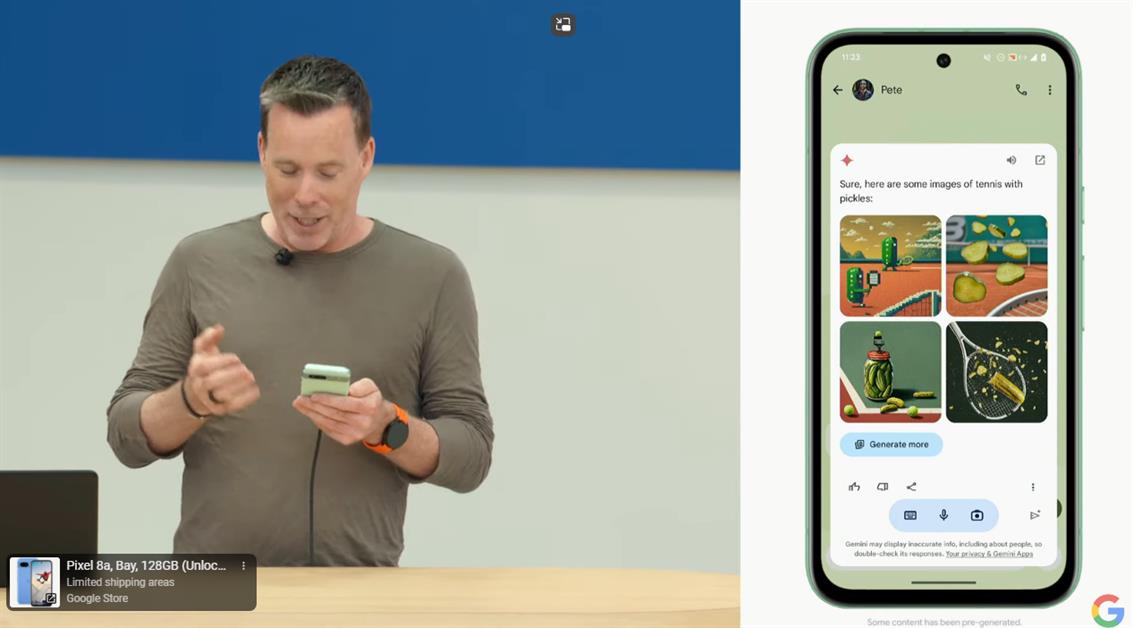

Gemini works at the system level, which means you can bring it up even when you’re in another app. Now, it’s also context-aware, which means it analyzes what you want to do and gives suggestions accordingly.

For example, you can bring up Gemini to generate images when chatting with someone, and you can drag-and-drop these images to the app. When watching a video, you can also pull up Gemini to ask questions about it.

Don’t want to browse through a 30-page document? Open Gemini and find what you’re looking for within the document in an instant.

On-Device AI

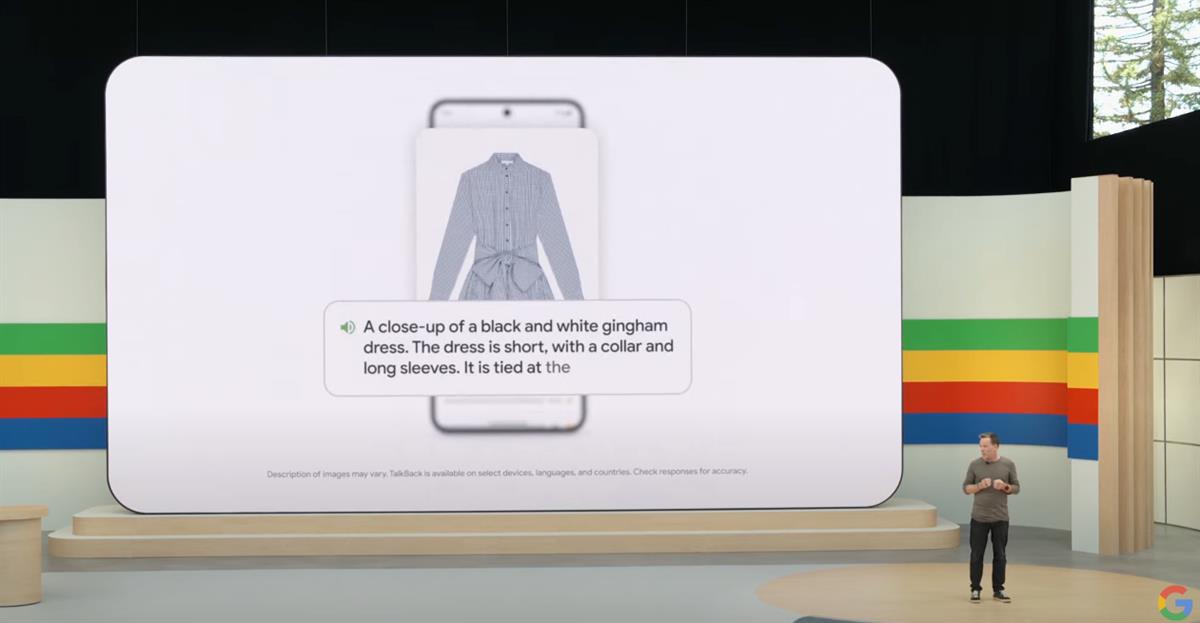

Android is the first mobile operating system with a built-in on-device foundation model. This allows for a faster AI experience while keeping your data private.

Coming to Pixels later this year, Gemini Nano with Multimodality lets your phone understand not just text input, but also visuals, audio, and spoken language. For example, TalkBack will be able to deliver more detailed descriptions of photos, and your phone can immediately warn you of a scam call just by hearing and analyzing what the other person is saying. (This feature is currently in testing.)

Android 15 Updates (Android 15 Beta 2)

Google is set to reveal more information on Android 15 tomorrow, so stay tuned.

Emman has been writing technical and feature articles since 2010. Prior to this, he became one of the instructors at Asia Pacific College in 2008, and eventually landed a job as Business Analyst and Technical Writer at Integrated Open Source Solutions for almost 3 years.