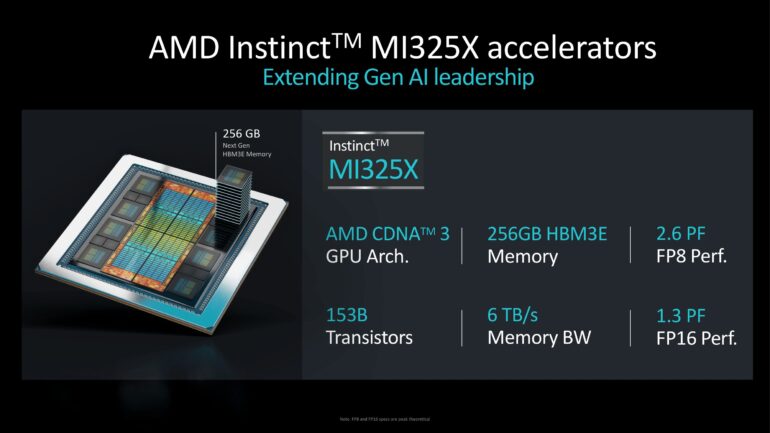

AMD has been keeping pace with its competitors, having launched its 5th Gen EPYC server CPUs and Ryzen 9000 desktop CPUs to take on Intel on both the server and consumer fronts. Today, AMD also announced a surprising challenger to Nvidia’s Blackwell GPU: the AMD Instinct MI325X, the company’s latest AI accelerator, built on the AMD CDNA 3 architecture.

“AMD continues to deliver on our roadmap, offering customers the performance they need and the choice they want, to bring AI infrastructure, at scale, to market faster,” … “With the new AMD Instinct accelerators, EPYC processors and AMD Pensando networking engines, the continued growth of our open software ecosystem, and the ability to tie this all together into optimized AI infrastructure, AMD underscores the critical expertise to build and deploy world-class AI solutions.”

-Forrest Norrod, executive vice president and general manager, Data Center Solutions Business Group, AMD

AMD Instinct MI325X Extends Leading AI Performance

AMD Instinct MI325X accelerators carry 256GB of HBM3E memory with 6TB/s of bandwidth, offering 1.8x the capacity and 1.3x the bandwidth of the Nvidia H200. AMD also claims 1.3x higher peak theoretical FP16 and FP8 compute performance than the H200.
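As a rough sanity check of these ratios, the sketch below simply divides the MI325X figures by the H200’s. It assumes AMD’s announced 256GB and 6TB/s plus the spec table’s dense FP16 figure of 1307.4 TFLOPS for the MI325X, and Nvidia’s published 141GB and 4.8TB/s for the H200. Nvidia lists H200 FP16 at 1,979 TFLOPS with sparsity; halving it to get a dense figure is an assumption made here for an apples-to-apples comparison. These are peak vendor specs, not measured performance.

```python
# Back-of-the-envelope check of AMD's MI325X vs. H200 ratios.
# All values are vendor peak specs; the H200 dense FP16 number is ASSUMED
# to be half of Nvidia's with-sparsity figure (1,979 TFLOPS).
MI325X = {"memory_gb": 256, "bandwidth_tbs": 6.0, "fp16_dense_tflops": 1307.4}
H200 = {"memory_gb": 141, "bandwidth_tbs": 4.8, "fp16_dense_tflops": 1979 / 2}


def ratio(key: str) -> float:
    """Return the MI325X value divided by the H200 value for one spec."""
    return MI325X[key] / H200[key]


capacity_ratio = ratio("memory_gb")
bandwidth_ratio = ratio("bandwidth_tbs")
fp16_ratio = ratio("fp16_dense_tflops")

if __name__ == "__main__":
    print(f"Memory capacity: {capacity_ratio:.2f}x")
    print(f"Memory bandwidth: {bandwidth_ratio:.2f}x")
    print(f"Peak dense FP16: {fp16_ratio:.2f}x")
```

With these inputs the ratios come out to roughly 1.82x, 1.25x, and 1.32x, which line up with AMD’s rounded 1.8x and 1.3x claims.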

“AI demand has actually continued to take off and actually exceed expectations. It’s clear that the rate of investment is continuing to grow everywhere.”

-Lisa Su, AMD CEO

AMD CDNA 4 Architecture Preview

AMD also previewed its next-generation AMD Instinct MI350 series accelerators, based on the AMD CDNA 4 architecture. AMD says these upcoming GPUs are designed to deliver up to a 35x improvement in inference performance over current CDNA 3-based accelerators. The MI350 series will carry up to 288GB of HBM3E memory per accelerator and is expected to be available in the second half of 2025.

AMD Next-Gen AI Networking

AMD also introduced two new networking solutions for moving data efficiently through AI infrastructure: the AMD Pensando Salina DPU for front-end networks and the AMD Pensando Pollara 400 AI NIC for back-end networks. The Salina DPU is AMD’s third-generation programmable DPU and, according to AMD, delivers double the performance, bandwidth, and scalability of its predecessor. Its 400G throughput is meant to keep data flowing quickly to AI clusters while improving efficiency and security for data-intensive AI applications.
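To put 400G in perspective: network line rates are quoted in gigabits per second, so dividing by 8 gives bytes per second. The sketch below assumes an ideal link with zero protocol overhead, which real networks never quite reach, and the 1TB payload is a hypothetical example rather than anything AMD quoted.

```python
# Ideal transfer time at a given network line rate.
# ASSUMPTION: no protocol/encoding overhead; real throughput will be lower.
LINE_RATE_GBPS = 400  # Salina DPU headline throughput, in gigabits per second


def transfer_seconds(payload_bytes: float, line_rate_gbps: float = LINE_RATE_GBPS) -> float:
    """Return the time to move payload_bytes at full line rate."""
    bytes_per_second = line_rate_gbps * 1e9 / 8  # 400 Gb/s -> 50 GB/s
    return payload_bytes / bytes_per_second


ONE_TERABYTE = 1e12  # hypothetical payload size (decimal terabyte)
t_seconds = transfer_seconds(ONE_TERABYTE)

if __name__ == "__main__":
    print(f"1 TB at {LINE_RATE_GBPS}G takes about {t_seconds:.1f} s (ideal)")
```

At full line rate, 400Gb/s works out to 50GB/s, so an ideal 1TB transfer takes about 20 seconds.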

According to AMD, the Pollara 400 is the industry’s first Ultra Ethernet Consortium (UEC)-ready AI network interface card (NIC), powered by the P4 programmable engine. It supports next-generation RDMA software and is backed by an open networking ecosystem, which makes it well suited to maximizing performance and scalability in accelerator-to-accelerator communication on back-end networks.

AMD Pensando Salina DPU and AMD Pensando Pollara 400 are sampling with customers in Q4’24 and are on track for availability in the first half of 2025.

New AMD AI Software for Generative AI

Paired with the new AI accelerators are AMD’s improved AI software capabilities, delivered through the latest release of its ROCm open software stack. This update focuses on improving performance and expanding support for key AI frameworks and models, particularly for generative AI.

The ROCm 6.2 release introduces key AI features such as the FP8 datatype, Flash Attention 3, and kernel fusion. AMD says these deliver up to a 2.4x improvement in inference and 1.8x in training for a variety of large language models (LLMs) compared to ROCm 6.0.
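FP8’s main benefit is easy to quantify: each value takes one byte instead of FP16’s two. The sketch below estimates weight memory only (no KV cache, activations, or framework overhead) for a hypothetical 70-billion-parameter model, and how many parameters’ worth of weights would fit in the MI325X’s 256GB. The function names are illustrative, not part of ROCm.

```python
# Weight-memory estimate for FP16 vs FP8.
# ASSUMPTION: weights only; KV cache, activations and runtime overhead ignored.
BYTES_PER_PARAM = {"fp16": 2, "fp8": 1}


def weight_gb(num_params: float, dtype: str) -> float:
    """Return the memory needed to hold num_params weights, in decimal GB."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def max_params_billion(capacity_gb: float, dtype: str) -> float:
    """Return how many billions of weights fit in capacity_gb of memory."""
    return capacity_gb / BYTES_PER_PARAM[dtype]


PARAMS = 70e9  # hypothetical 70B-parameter model
MI325X_GB = 256

if __name__ == "__main__":
    for dtype in ("fp16", "fp8"):
        print(f"{dtype}: {weight_gb(PARAMS, dtype):.0f} GB of weights; "
              f"up to {max_params_billion(MI325X_GB, dtype):.0f}B params fit in {MI325X_GB} GB")
```

Halving the bytes per weight halves the memory footprint and the bandwidth needed to stream those weights, which is where much of FP8’s speedup on memory-bound inference comes from.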

Key Improvements in ROCm 6.2:

- FP8 Datatype: Stores each value in 8 bits, half the size of FP16, cutting memory and bandwidth needs and speeding up AI models on hardware with FP8 support.

- Flash Attention 3: Speeds up the attention computation in LLMs by processing it in tiles, so the full attention matrix never has to be written out to memory.

- Kernel Fusion: Combines multiple operations into a single kernel for reduced overhead and faster execution.
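The core idea behind the FlashAttention family is computing attention block by block with an "online" softmax: keep a running maximum, a running sum, and a running weighted output, and rescale them whenever a new block raises the maximum. The pure-Python sketch below shows that idea for a single query vector and checks it against a naive implementation. It is not ROCm’s kernel, and it omits FlashAttention 3’s GPU-specific optimizations (such as overlapping data movement with computation and FP8 paths); the function names are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def naive_attention(q: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Reference: materialize every score, softmax them, then weight the values."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [dot(q, k) * scale for k in keys]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) / total for j in range(dim)]


def blockwise_attention(q: Vector, keys: List[Vector], values: List[Vector],
                        block_size: int = 2) -> Vector:
    """Online-softmax attention: visit K/V in blocks, never holding all scores at once."""
    scale = 1.0 / math.sqrt(len(q))
    running_max = -math.inf
    running_sum = 0.0
    acc = [0.0] * len(values[0])  # unnormalized weighted sum of values
    for start in range(0, len(keys), block_size):
        k_blk = keys[start:start + block_size]
        v_blk = values[start:start + block_size]
        scores = [dot(q, k) * scale for k in k_blk]
        new_max = max(running_max, max(scores))
        # Rescale earlier partial results so all exponents share the new max.
        correction = math.exp(running_max - new_max)  # exp(-inf) == 0.0 on block 1
        running_sum *= correction
        acc = [a * correction for a in acc]
        for s, v in zip(scores, v_blk):
            w = math.exp(s - new_max)
            running_sum += w
            acc = [a + w * vj for a, vj in zip(acc, v)]
        running_max = new_max
    return [a / running_sum for a in acc]


q = [1.0, 0.5]
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.5], [0.5, -0.5]]
values = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0], [9.0, 10.0]]
naive_out = naive_attention(q, keys, values)
blockwise_out = blockwise_attention(q, keys, values, block_size=2)

if __name__ == "__main__":
    print(naive_out)
    print(blockwise_out)  # matches naive_out up to floating-point rounding
```

Because only one block of scores exists at a time, a GPU kernel built on this idea can keep the working set in fast on-chip memory instead of writing a full sequence-by-sequence score matrix to HBM.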

AMD Instinct Accelerator Specs

| Specification | MI325X | MI300X | MI250X | MI100 |
|---|---|---|---|---|
| Compute Units | 304 | 304 | 2 x 110 | 120 |
| Matrix Cores | 1216 | 1216 | 2 x 440 | 480 |
| Stream Processors | 19456 | 19456 | 2 x 7040 | 7680 |
| Boost Clock | 2100MHz | 2100MHz | 1700MHz | 1502MHz |
| FP64 Vector | 81.7 TFLOPS | 81.7 TFLOPS | 47.9 TFLOPS | 11.5 TFLOPS |
| FP32 Vector | 163.4 TFLOPS | 163.4 TFLOPS | 47.9 TFLOPS | 23.1 TFLOPS |
| FP64 Matrix | 163.4 TFLOPS | 163.4 TFLOPS | 95.7 TFLOPS | 11.5 TFLOPS |
| FP32 Matrix | 163.4 TFLOPS | 163.4 TFLOPS | 95.7 TFLOPS | 46.1 TFLOPS |
| FP16 Matrix | 1307.4 TFLOPS | 1307.4 TFLOPS | 383 TFLOPS | 184.6 TFLOPS |
| INT8 Matrix | 2614.9 TOPS | 2614.9 TOPS | 383 TOPS | 184.6 TOPS |
| Memory Clock | ~5.9 Gbps HBM3E | 5.2 Gbps HBM3 | 3.2 Gbps HBM2E | 2.4 Gbps HBM2 |
| Memory Bus Width | 8192-bit | 8192-bit | 8192-bit | 4096-bit |
| Memory Bandwidth | 6TB/sec | 5.3TB/sec | 3.2TB/sec | 1.23TB/sec |
| VRAM | 256GB (8x32GB) | 192GB (8x24GB) | 128GB (2x4x16GB) | 32GB (4x8GB) |
| ECC | Yes (Full) | Yes (Full) | Yes (Full) | Yes (Full) |
| Infinity Fabric Links | 7 (896GB/sec) | 7 (896GB/sec) | 8 | 3 |
| TDP | 1000W | 750W | 560W | 300W |
| GPU | 8x CDNA 3 XCD | 8x CDNA 3 XCD | 2x CDNA 2 GCD | CDNA 1 |
| Transistor Count | 153B | 153B | 2 x 29.1B | 25.6B |
| Manufacturing Process | XCD: TSMC N5, IOD: TSMC N6 | XCD: TSMC N5, IOD: TSMC N6 | TSMC N6 | TSMC 7nm |
| Architecture | CDNA 3 | CDNA 3 | CDNA 2 | CDNA (1) |
| Form Factor | OAM | OAM | OAM | PCIe |
| Launch Date | Q4 2024 | 12/2023 | 11/2021 | 11/2020 |
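Several rows in the spec table can be cross-checked against each other. The sketch below assumes that peak memory bandwidth equals the per-pin data rate times the bus width divided by 8 bits per byte, and that each CDNA compute unit holds 64 stream processors and 4 matrix cores (ratios implied by the table itself). MI250X figures are summed across both dies.

```python
# Cross-check the spec table: SPs per CU, matrix cores per CU, and bandwidth.
# Values copied from the table; MI250X is the sum of its two dies.
SPECS = {
    "MI325X": {"cus": 304, "sps": 19456, "matrix_cores": 1216, "mem_gbps": 5.9, "bus_bits": 8192},
    "MI300X": {"cus": 304, "sps": 19456, "matrix_cores": 1216, "mem_gbps": 5.2, "bus_bits": 8192},
    "MI250X": {"cus": 220, "sps": 14080, "matrix_cores": 880, "mem_gbps": 3.2, "bus_bits": 8192},
    "MI100": {"cus": 120, "sps": 7680, "matrix_cores": 480, "mem_gbps": 2.4, "bus_bits": 4096},
}


def bandwidth_tbs(mem_gbps: float, bus_bits: int) -> float:
    """Peak bandwidth in TB/s: per-pin Gb/s x bus width / 8 bits per byte / 1000."""
    return mem_gbps * bus_bits / 8 / 1000


if __name__ == "__main__":
    for name, s in SPECS.items():
        print(f"{name}: {s['sps'] // s['cus']} SPs/CU, "
              f"{s['matrix_cores'] // s['cus']} matrix cores/CU, "
              f"{bandwidth_tbs(s['mem_gbps'], s['bus_bits']):.2f} TB/s")
```

The computed bandwidths (about 6.04, 5.32, 3.28, and 1.23 TB/s) agree with the table’s rounded Memory Bandwidth row, and every card works out to 64 stream processors and 4 matrix cores per compute unit.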

Grant is a Financial Management graduate from UST. His passion for gadgets and tech led him into the industry, where he applies both his knowledge as an enthusiast and the in-depth analytical skills of a Finance major. That passion lets him earn a living while helping Gadget Pilipinas' readers make smart, value-based decisions and purchases through his reviews and guides.